As we delve into the history of artificial intelligence, we find ourselves on a fascinating journey that spans decades of human ingenuity and technological breakthroughs. From the pioneering work of Alan Turing to the rise of ChatGPT, we’ve witnessed a remarkable evolution in machine intelligence.

This progression has impacted nearly every aspect of our lives, revolutionizing industries and challenging our understanding of what it means to be intelligent.

In this exploration, we’ll trace the path of AI development, starting with the theoretical foundations laid by visionaries like Turing.

We’ll examine early AI research, the emergence of expert systems, and the shift towards connectionist approaches. Our journey will take us through the rise of machine learning, advancements in natural language processing, and the game-changing transformer architecture.

The Theoretical Foundations of AI

Turing’s Contributions to Computer Science

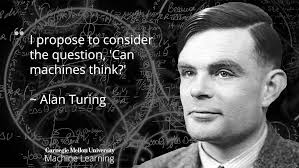

We explore AI’s theoretical foundations with Alan Turing’s groundbreaking work. In 1936, Turing invented the concept of a computer as part of his attempt to solve the Entscheidungsproblem, a complex mathematical puzzle. This led to the creation of the Turing machine, a mathematical model that serves as the basis for modern computers.

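A Turing machine consists of an infinitely long tape divided into cells that hold symbols, a head that reads and writes one symbol at a time, and a finite table of rules that tells the machine what to write and which way to move. Turing also described a universal machine that can simulate any other Turing machine, an idea that became the foundation for modern computing and significantly impacted the field of artificial intelligence.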
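To make the tape-and-rules idea concrete, here is a minimal sketch of a one-tape Turing machine simulator. The function name `run_turing_machine`, the rule format, and the bit-flipping example machine are all assumptions chosen for illustration, not something taken from Turing's paper.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Simulate a one-tape Turing machine.

    `rules` maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        new_symbol, move, state = rules[(state, symbol)]
        cells[head] = new_symbol
        head += 1 if move == "R" else -1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example machine: flip every bit, halt at the first blank cell.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

result = run_turing_machine(FLIP_BITS, "10110")
print(result)  # 01001
```

The rule table is the whole "program": changing the dictionary changes what the machine computes, while the simulator loop stays the same.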

One of Turing’s most important contributions was demonstrating that some mathematical problems cannot be solved by any algorithm, placing a fundamental limit on the power of computation. This result, usually illustrated by the halting problem, is known as undecidability. It is distinct from the Church-Turing thesis, but both ideas have profoundly influenced computer science and AI.

The Church-Turing Thesis

The Church-Turing thesis, also called Church’s or Turing’s Thesis, is a fundamental hypothesis in computer science. It states that any real-world computation can be translated into an equivalent computation involving a Turing machine. This principle forms the foundation of modern computers and has significant implications for AI.

The thesis revolves around critical notions such as computation (solving specific problems through systematic procedures) and algorithms (sets of instructions used to perform computations). While the Church-Turing thesis is generally accepted as accurate, it’s important to note that it hasn’t been formally proven, as it’s essentially a statement about the physical world and the nature of ‘effective computability’.

There are several variations of the Church-Turing thesis:

- The Extended Church-Turing Thesis: This version suggests that the Turing machine model efficiently captures all conceivable models of computation.

- The Strong Church-Turing Thesis: This iteration encompasses non-deterministic and quantum computation models, asserting that if a function is computationally solvable by any physical means, it can also be solved by a Turing Machine or equivalent computational model.

The Church-Turing thesis has a significant impact on AI and machine learning. It suggests that if a human intelligence process can be encapsulated as an algorithm, a machine can be programmed to replicate that process.

Information Theory and AI

Information Theory (IT) has become an essential component in the development of AI. With its strong theoretical and mathematically rigorous basis, IT has found applications in various scientific fields, including physics, communication theory, and dynamical systems.

Recently, there has been a push in academia to combine Information Theory with Machine Learning. This intersection has shown considerable potential in enhancing classical machine learning algorithms. For instance, researchers have combined an information-theoretic distance metric with a classical ML algorithm, the k-nearest-neighbors (KNN) classifier, to show that even this simple classifier can be competitive with deep learning models, and can outperform them on some text classification benchmarks.

The concept of Kolmogorov Complexity from Algorithmic Information Theory (AIT) has been instrumental in measuring the information distance between text strings. It quantifies the information contained in a string as the length of its shortest possible description. Because that length cannot be computed exactly, practical methods approximate it with the size of the string after compression.
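The following is a minimal sketch of how a compression-based distance can drive a KNN classifier. It assumes `zlib` as the stand-in compressor and uses the normalized compression distance (NCD); the helper names and the tiny labelled dataset are hypothetical and only illustrate the idea, not any published experiment.

```python
import zlib
from collections import Counter


def compressed_size(text: str) -> int:
    """Compressed length in bytes, a rough proxy for Kolmogorov complexity."""
    return len(zlib.compress(text.encode("utf-8")))


def ncd(x: str, y: str) -> float:
    """Normalized compression distance: small when x and y share information."""
    cx, cy = compressed_size(x), compressed_size(y)
    cxy = compressed_size(x + " " + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


def knn_predict(query, labelled_texts, k=3):
    """Majority label among the k training texts closest to `query` under NCD."""
    neighbours = sorted(labelled_texts, key=lambda pair: ncd(query, pair[0]))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical toy dataset, for illustration only.
training = [
    ("the striker scored a late goal to win the football match", "sport"),
    ("the goalkeeper saved a penalty in the football final", "sport"),
    ("simmer the tomato sauce and stir in chopped fresh basil", "cooking"),
    ("whisk the eggs then fold in flour and melted butter", "cooking"),
]
print(knn_predict("the team won the match with a goal in extra time", training, k=1))
```

On texts this short the compressor has little to work with, so the prediction is noisy; the approach is normally applied to longer documents.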

Early AI Research and Development

We begin exploring early AI research and development with a pivotal moment in the field’s history. In 1956, a groundbreaking event would shape the future of artificial intelligence.

The Dartmouth Conference

The birth of AI as an official discipline can be traced back to the summer of 1956, when a notable conference was held at Dartmouth College in Hanover, New Hampshire, USA. This event, known as the Dartmouth Summer Research Project on Artificial Intelligence, brought together some of the brightest minds in computing and cognitive science.

The conference was the brainchild of John McCarthy, a young Assistant Professor of Mathematics at Dartmouth College. McCarthy, dissatisfied with the focus of submissions to the Annals of Mathematics Studies journal, decided to organize a group to clarify and develop ideas about thinking machines.

The conference was based on a bold conjecture: “Every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it”. This statement set the tone for the nascent AI field’s ambitious goals.

Notable figures such as Marvin Minsky, Nathaniel Rochester, and Claude Shannon were among the organizers. The event, funded by the Rockefeller Foundation, was a brainstorming session to explore whether machines could perform like humans.

While the conference didn’t go as planned, with only ten participants ultimately attending, its historical importance is unquestionable. The term “Artificial Intelligence,” which McCarthy had coined in the proposal for the event, took hold here, and many of the participants would make key contributions to the field in the following years.

General Problem Solver (GPS)

Following the Dartmouth Conference, one of the landmark achievements in early AI was the development of the General Problem Solver (GPS) in 1957 by Allen Newell and Herbert A. Simon. The GPS was an ambitious attempt to create a universal problem-solving machine that could tackle a wide range of problems using a set of heuristics and problem-solving techniques.

The GPS operated by representing problems and their associated knowledge in a symbolic form and applying problem-solving rules to manipulate these symbols. One of its key innovations was the use of means-ends analysis, a problem-solving strategy that involves:

- Identifying the differences between the current problem state and the desired goal state

- Applying operators to reduce these differences

- Breaking down complex problems into smaller sub-goals

- Continuously monitoring progress and making adjustments

The GPS was notable for its ability to learn from experience and adapt its problem-solving strategies through trial-and-error learning. While it could solve simple problems like the Towers of Hanoi, it struggled with real-world problems due to the combinatorial explosion of possibilities.
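Means-ends analysis can be sketched in a few lines. The version below is a simplified illustration, not a reconstruction of the original GPS program: the `Operator` class, the condition strings, and the "drive son to school" scenario are all assumptions made for the example. Each operator lists the conditions it needs, the conditions it adds, and the conditions it removes; the solver picks an operator that reduces a difference and recursively treats its preconditions as sub-goals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    name: str
    preconds: tuple                   # conditions required before applying
    adds: frozenset                   # conditions made true
    deletes: frozenset = frozenset()  # conditions made false


def achieve(state, goal, operators, stack=frozenset()):
    """Return (new_state, plan) that makes `goal` true, or None on failure."""
    if goal in state:
        return state, []              # no difference left to reduce
    if goal in stack:
        return None                   # already pursuing this goal: avoid loops
    for op in operators:
        if goal not in op.adds:
            continue                  # this operator does not reduce the difference
        result = achieve_all(state, op.preconds, operators, stack | {goal})
        if result is not None:
            sub_state, plan = result
            return (sub_state - op.deletes) | op.adds, plan + [op.name]
    return None


def achieve_all(state, goals, operators, stack=frozenset()):
    """Achieve each goal in turn, then check none was undone along the way."""
    plan = []
    for goal in goals:
        result = achieve(state, goal, operators, stack)
        if result is None:
            return None
        state, steps = result
        plan += steps
    return (state, plan) if all(g in state for g in goals) else None


OPS = [
    Operator("drive son to school", ("son at home", "car works"),
             frozenset({"son at school"}), frozenset({"son at home"})),
    Operator("shop installs battery",
             ("car needs battery", "shop knows problem", "shop has money"),
             frozenset({"car works"})),
    Operator("telephone shop", ("know phone number",),
             frozenset({"shop knows problem"})),
    Operator("give shop money", ("have money",),
             frozenset({"shop has money"}), frozenset({"have money"})),
]

start = frozenset({"son at home", "car needs battery", "have money", "know phone number"})
final_state, plan = achieve_all(start, ("son at school",), OPS)
print(plan)
# ['telephone shop', 'give shop money', 'shop installs battery', 'drive son to school']
```

Because every unmet precondition spawns its own search, the number of possibilities grows quickly as operators are added, which is the combinatorial explosion mentioned above.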

SHRDLU and Natural Language Understanding

Another significant development in early AI research was SHRDLU, a natural language understanding program created by Terry Winograd at the MIT Artificial Intelligence Laboratory between 1968 and 1969. SHRDLU demonstrated a simple conversation with a user via teletype about a small world of objects known as the “blocks world”.

What made SHRDLU special was how it combined four simple ideas to create a convincing simulation of “understanding”:

- A limited vocabulary of about 50 words, including nouns like “block” and “cone,” verbs like “place on” and “move to,” and adjectives like “big” and “blue”

- A primary memory to provide context for conversations

- Basic physics that made blocks fall over

- The ability to remember the names of things and how they were put together

SHRDLU could engage in dialogues, answer questions about the blocks world, and learn new concepts. For example, if told, “A steeple is a small triangle on top of a tall rectangle,” SHRDLU could answer questions about steeples and build new ones.

These early developments in AI research laid the groundwork for many of the advancements we see today, demonstrating the potential for machines to engage in problem-solving, language understanding, and learning. As we continue our journey through the history of AI, we’ll see how these foundational ideas evolved and expanded, leading to the sophisticated AI systems we have today.

Knowledge Representation and Expert Systems

As we delve deeper into the history of artificial intelligence, we encounter the fascinating realm of knowledge representation and expert systems. These advancements have been crucial in shaping today’s AI landscape.

Semantic Networks

We begin our exploration with semantic networks, a knowledge representation method that has become integral to AI systems. At their core, semantic networks capture and structure knowledge by portraying it as a network of interconnected nodes and edges. This approach allows us to visually represent complex webs of entities and the relationships between them.

In a semantic network, nodes serve as the building blocks, representing entities, concepts, or objects. Edges, on the other hand, signify the relationships or connections between these nodes. If we think of nodes as nouns in our knowledge structure, edges act as the verbs, indicating the actions, associations, or attributes that link different concepts.
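As a rough illustration, a semantic network can be stored as a list of (node, edge, node) triples. The sketch below assumes a tiny hand-written network and hypothetical helper names; it shows how following `is_a` edges lets a query inherit facts from more general concepts.

```python
# Each edge is a (subject, relation, object) triple: nouns linked by a "verb".
EDGES = [
    ("canary", "is_a", "bird"),
    ("bird", "is_a", "animal"),
    ("bird", "can", "fly"),
    ("canary", "has_colour", "yellow"),
    ("animal", "can", "breathe"),
]


def related(node, relation):
    """Objects directly linked to `node` by `relation`."""
    return {obj for subj, rel, obj in EDGES if subj == node and rel == relation}


def inherited(node, relation):
    """Values of `relation` for `node` and for every ancestor reached via is_a."""
    found, frontier, seen = set(), [node], set()
    while frontier:
        current = frontier.pop()
        if current in seen:
            continue
        seen.add(current)
        found |= related(current, relation)
        frontier.extend(related(current, "is_a"))
    return found


abilities = inherited("canary", "can")
print(sorted(abilities))  # ['breathe', 'fly']
```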

One of semantic networks’ primary strengths is their ability to represent complex relationships and concepts intuitively and naturally. This approach often corresponds more closely to our thought processes and understanding of the world, making it easier for both humans and machines to grasp complex knowledge structures.

Semantic networks have found applications in various AI-enabled tools. For instance, WordNet, an English-based lexical database, groups English words into sets of synonyms and organizes them into semantic networks. Another example is SciCrunch, a knowledge base of scientific resources that uses semantic data integration and information extraction techniques to assist biologists in generating hypotheses and explaining observed results from experiments.

Frames and Scripts

Building upon the concept of semantic networks, we move on to frames and scripts, which are conceptual structures used in AI to represent and reason about knowledge. Frames are data structures that represent stereotypical situations, objects, or concepts. They consist of a collection of related attributes, called slots, which store information about the described entity.

For example, a “restaurant” frame might have slots for the restaurant’s name, cuisine, location, hours of operation, and menu items. Frames capture the typical properties and relationships associated with a particular concept, allowing AI systems to make inferences and fill in missing information.
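A frame can be sketched as a named collection of slots with an optional parent frame that supplies default values. The `Frame` class and the slot names below are hypothetical, chosen to mirror the restaurant example; real frame systems offer much more, such as attached procedures.

```python
class Frame:
    """A frame: named slots plus an optional parent that supplies defaults."""

    def __init__(self, name, parent=None, **slots):
        self.name = name
        self.parent = parent
        self.slots = dict(slots)

    def get(self, slot):
        """Look up a slot, falling back to parent frames for missing values."""
        frame = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        return None


# Generic frame holding typical properties of any restaurant.
restaurant = Frame("restaurant", has_menu=True, payment="pay after eating")
# Specific instance: fills some slots and inherits the rest.
luigis = Frame("Luigi's", parent=restaurant, cuisine="Italian", location="Main Street")

print(luigis.get("cuisine"))   # Italian
print(luigis.get("has_menu"))  # True (inherited default)
```

The inherited lookup is what lets a system fill in missing information: nothing was said about whether Luigi's has a menu, yet the frame answers from the stereotype.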

Scripts, conversely, represent sequences of actions or events that describe common, stereotypical scenarios. They provide a structured way to model and reason about typical real-world situations and the steps involved. For instance, a “restaurant dining” script might include entering, being seated, ordering food, eating the meal, paying the bill, and leaving.
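A script can be sketched as an ordered list of events. The hypothetical helper below uses the restaurant dining steps from the paragraph above to infer events that a story left unstated, which is one way scripts support story understanding.

```python
RESTAURANT_SCRIPT = ["enter", "be seated", "order food", "eat meal", "pay bill", "leave"]


def infer_unstated_events(script, mentioned):
    """Script steps before the last mentioned event that the story never stated."""
    last = max(script.index(event) for event in mentioned)
    return [step for step in script[: last + 1] if step not in mentioned]


# A story says only: "Ana entered the restaurant. Later she paid the bill."
implied = infer_unstated_events(RESTAURANT_SCRIPT, ["enter", "pay bill"])
print(implied)  # ['be seated', 'order food', 'eat meal']
```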

Both frames and scripts have applications in various AI techniques, such as natural language processing, knowledge representation, and reasoning. They enable AI systems to understand the context and typical patterns associated with different concepts and situations, crucial for text comprehension, story understanding, and commonsense reasoning.

MYCIN and Medical Diagnosis

One of the most influential applications of knowledge representation and expert systems in AI’s history is in medical diagnosis. In the 1970s, we witnessed the introduction of MYCIN, a groundbreaking consultation program that marked the beginning of AI’s impact on medicine.

MYCIN was developed alongside other early systems like CASNET (Causal-Associational Network), which could use disease data, apply it to individuals, and advise physicians on how to help patients manage diseases. The generalization of MYCIN into the EMYCIN shell and the arrival of INTERNIST-1 within just a few years showcased the rapid progress in AI’s medical knowledge and its ability to assist primary care physicians (PCPs).

However, the introduction of DXplain in 1986 revolutionized AI in medicine. This program allowed PCPs to input their patient’s symptoms, and in response, it provided a diagnosis along with a description of the disease and additional references for the physicians. Starting with 500 diseases, DXplain has now expanded to cover over 2,400 conditions, demonstrating the vast potential of AI in enhancing medical diagnosis and treatment.

These advancements in knowledge representation and expert systems have laid the foundation for the sophisticated AI applications we see in healthcare today, showcasing the transformative power of AI in improving patient care and medical decision-making.

The Connectionist Approach

Perceptrons and Neural Networks

We begin our exploration of the connectionist approach with the groundbreaking work on perceptrons, which laid the foundation for modern neural networks. In 1959, Frank Rosenblatt brought the perceptron to Cornell University’s Ithaca campus, where he became director of the Cognitive Systems Research Program. The perceptron, a single-layer neural network, was designed to classify inputs into one of two categories, making predictions and adjusting its weights to improve accuracy over many iterations.
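The predict-and-adjust loop can be sketched as follows. This is a minimal illustration of the classic perceptron learning rule, not Rosenblatt's original hardware or code; the function names and the logical AND training set are assumptions picked because AND is linearly separable, so the rule is guaranteed to converge.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights and bias with the perceptron update rule."""
    n_inputs = len(samples[0][0])
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction  # 0 when correct, +1 or -1 when wrong
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, inputs):
    """Classify inputs into one of two categories (0 or 1)."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


# Hypothetical training data: the logical AND of two binary inputs.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_GATE)
print([predict(weights, bias, x) for x, _ in AND_GATE])  # [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line (or hyperplane), which is why a function such as XOR is out of its reach.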

Rosenblatt’s work sparked both excitement and scepticism in the scientific community. His contemporary, Marvin Minsky, a grade behind Rosenblatt at the Bronx High School of Science, became a vocal critic of the perceptron’s potential. Their public debates at conferences captivated their colleagues and students, highlighting the intense interest and controversy surrounding this new approach to artificial intelligence.

Parallel Distributed Processing

As we delve deeper into the connectionist approach, we encounter the concept of parallel distributed processing (PDP), which has become a cornerstone of modern AI systems. PDP is a type of computing where multiple processors work together to complete a task, with each processor having its local memory and focusing on a specific part of the problem. This approach has proven particularly effective for tasks divided into smaller components, such as image processing and weather forecasting.

The idea of parallelism in computing has deep roots, but it wasn’t until the advent of modern computers that researchers could fully explore its potential. The concept was first proposed in 1948 and was further developed by scientists like John McCarthy and Marvin Minsky in the 1950s and 1960s. Today, PDP has found applications in various domains, from artificial intelligence algorithms to drug discovery research, enabling us to tackle incredibly complex tasks that would be impossible or extremely time-consuming using traditional methods.

Backpropagation and Deep Learning

The development of backpropagation marked a turning point in the history of artificial intelligence, helping to end the “AI winter” and ushering in a new era of innovation. In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams published a groundbreaking paper in Nature titled “Learning representations by back-propagating errors,” which introduced an efficient method for training and optimizing neural networks.

Backpropagation, or “backprop,” is an algorithm that enables the training of deep neural networks by propagating the output error backwards through the layers, so that every weight can be adjusted in proportion to its share of the error. This breakthrough has had far-reaching implications for the field of AI:

- It significantly enhanced machine learning capabilities, enabling the development of more sophisticated and capable models.

- It paved the way for deep learning, a subset of machine learning involving deep neural networks, which is responsible for some of the most notable AI advancements in recent years.

- It opened up numerous practical applications, from image and speech recognition to developing sophisticated recommendation systems.

- It rekindled interest in AI, leading to renewed research, funding, and commercial interest.

Today, backpropagation remains the backbone of modern AI, powering large language models with billions of parameters and driving the most significant breakthroughs in the field.
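To show what "learning from errors" means mechanically, here is a minimal sketch of backpropagation for a tiny network with two inputs, two sigmoid hidden units, and one sigmoid output. The network size, starting weights, learning rate, and single training example are all arbitrary assumptions; the point is the chain-rule bookkeeping, not a realistic training setup.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Return (hidden activations, output) for a 2-2-1 sigmoid network."""
    w_hidden, b_hidden, w_out, b_out = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def backprop(params, x, target):
    """Gradients of the loss 0.5 * (output - target)**2 via the chain rule."""
    _, _, w_out, _ = params
    hidden, output = forward(params, x)
    delta_out = (output - target) * output * (1 - output)  # error at the output
    grad_w_out = [delta_out * h for h in hidden]
    # Send the error backwards through the output weights to each hidden unit.
    delta_hidden = [delta_out * w * h * (1 - h) for w, h in zip(w_out, hidden)]
    grad_w_hidden = [[d * xi for xi in x] for d in delta_hidden]
    return grad_w_hidden, delta_hidden, grad_w_out, delta_out


def sgd_step(params, grads, lr=0.5):
    """Move every parameter a small step against its gradient."""
    w_hidden, b_hidden, w_out, b_out = params
    g_wh, g_bh, g_wo, g_bo = grads
    return (
        [[w - lr * g for w, g in zip(row, g_row)] for row, g_row in zip(w_hidden, g_wh)],
        [b - lr * g for b, g in zip(b_hidden, g_bh)],
        [w - lr * g for w, g in zip(w_out, g_wo)],
        b_out - lr * g_bo,
    )


# Arbitrary starting weights and a single training example (assumptions).
params = ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2], [0.7, -0.6], 0.05)
x, target = [1.0, 0.0], 1.0

loss_before = 0.5 * (forward(params, x)[1] - target) ** 2
for _ in range(100):
    params = sgd_step(params, backprop(params, x, target))
loss_after = 0.5 * (forward(params, x)[1] - target) ** 2
print(loss_before, loss_after)  # the loss shrinks as the weights are adjusted
```

Modern frameworks compute these gradients automatically, but the underlying idea is the same: one forward pass to get the error, one backward pass to assign it to every weight.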

Machine Learning Paradigms

We’ve witnessed a remarkable evolution in machine learning paradigms, each offering unique approaches to tackle complex problems. Let’s explore the critical paradigms that have shaped the field of artificial intelligence.

Supervised Learning

In supervised learning, we work with a fully labelled dataset, where each example is tagged with the desired output. This approach is well suited to classification and regression problems. In classification tasks, we train the algorithm to predict discrete values, identifying input data as members of particular classes or groups. For instance, in a dataset of animal images, each photo might be pre-labelled as a cat, koala, or turtle.

Regression problems, on the other hand, deal with continuous data. A classic example is linear regression, where we predict the expected value of a dependent variable based on an independent variable. The ultimate goal of supervised learning is to build a model that generalizes well on future unseen data, which raises concerns about potential overfitting.
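As a small worked example of regression, the sketch below fits a straight line by ordinary least squares using the closed-form formulas for slope and intercept. The data (hours studied versus exam score) is made up for illustration, and `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labelled data: hours studied (input) and exam score (label).
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

slope, intercept = fit_line(hours, scores)
predicted = slope * 6 + intercept  # prediction for an unseen input
print(slope, intercept, predicted)  # 2.0 50.0 62.0
```

The prediction for six hours is the generalization step: the model is applied to an input that never appeared in the labelled data.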

Unsupervised Learning

Unsupervised learning presents a different challenge. Here, we hand the model a dataset without explicit instructions on what to do with it. The model then attempts to find structure in the data automatically by extracting useful features and patterns. This approach is beneficial when we’re asking questions we don’t know the answers to.

We can organize data in various ways using unsupervised learning:

- Clustering: Grouping similar data points together, like separating bird photos by species based on features like feather colour, size, or beak shape.

- Anomaly detection: Identifying outliers in a dataset, such as detecting fraudulent transactions in banking.

- Association: Predicting correlations between features in a data sample, like recommending related products in online shopping.

- Autoencoders: Compressing input data into a code and then attempting to recreate the input from that compressed code.
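Clustering, the first item in the list above, can be sketched with k-means, one common clustering algorithm. The implementation below is a bare-bones illustration: it naively uses the first k points as starting centroids (an assumption made to keep the result deterministic), and the 2-D points are made-up stand-ins for measured features such as size and beak length.

```python
def kmeans(points, k, iterations=10):
    """Group points into k clusters by repeatedly assigning and re-centering."""
    centroids = list(points[:k])  # naive initialisation: the first k points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each point to its nearest centroid (squared distance).
            nearest = min(
                range(k),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centroids[i])),
            )
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if empty).
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical unlabelled feature vectors forming two obvious groups.
points = [(1.0, 1.0), (9.0, 9.0), (1.5, 2.0), (8.0, 8.5), (0.5, 1.5), (9.5, 8.0)]
centroids, clusters = kmeans(points, k=2)
print(clusters[0])  # [(1.0, 1.0), (1.5, 2.0), (0.5, 1.5)]
```

No labels were supplied at any point; the grouping emerges purely from distances between the points.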

Semi-supervised and Transfer Learning

Semi-supervised learning strikes a balance between supervised and unsupervised approaches. We work with a dataset that contains both labelled and unlabelled data, with a larger proportion of the latter. This method is particularly useful when extracting relevant features from the data is difficult and labelling examples is time-intensive for experts.

Transfer learning, a powerful technique in machine learning, allows us to apply knowledge gained from one task to a different but related problem. We start with a pre-existing model trained on a vast dataset and then fine-tune it for a specific task. This approach has become increasingly popular, especially in natural language processing and computer vision.

One fascinating application of transfer learning is in medical imaging. Models trained on general images can be fine-tuned to recognize specific cancer cells or understand the nuances of medical X-rays or MRIs. This technique has also found applications in understanding accents and dialects by fine-tuning models on regional voice samples.

As we continue to advance in machine learning, these paradigms evolve and intersect, leading to more sophisticated and efficient AI systems. The paradigm choice depends on the nature of the problem, the available data, and the specific requirements of the task.

Natural Language Processing Advancements

N-gram Models and Statistical NLP

Over the years, we’ve witnessed remarkable advancements in Natural Language Processing (NLP), with n-gram models playing a crucial role in its early development. N-grams are contiguous sequences of ‘n’ items from a given text or speech, providing a way to capture the local context in a sentence. For instance, in a bigram model, the sentence “I love ice cream” would be split into pairs: [“I love”, “love ice”, “ice cream”].

N-gram language models assign probabilities to sequences of words, allowing us to predict upcoming words or estimate the likelihood of entire sentences. This capability has proven invaluable in various NLP applications, such as grammar correction, speech recognition, and augmentative and alternative communication systems.

The rise of statistical methods in NLP marked a significant shift. These methods leverage the probabilities of word occurrences and co-occurrences to predict the next word or understand the meaning of a sentence. For example, a statistical model might determine that the likelihood of the word “rain” following “It might” is higher than that of the word “apple.”
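The “rain” versus “apple” comparison can be reproduced with a tiny bigram model that estimates the probability of a word from how often it follows the previous word. The four-sentence corpus and the function names are hypothetical; a real model would use far more text and some form of smoothing for unseen pairs.

```python
from collections import Counter


def train_bigram(sentences):
    """Count how often each word appears as a context and each bigram occurs."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        contexts.update(tokens[:-1])             # words that have a successor
        bigrams.update(zip(tokens, tokens[1:]))  # adjacent word pairs
    return contexts, bigrams


def next_word_prob(contexts, bigrams, prev, word):
    """Maximum-likelihood estimate of P(word | prev)."""
    if contexts[prev] == 0:
        return 0.0
    return bigrams[(prev, word)] / contexts[prev]


# Hypothetical toy corpus.
corpus = [
    "it might rain today",
    "it might rain tomorrow",
    "it might snow",
    "i love ice cream",
]
contexts, bigrams = train_bigram(corpus)

p_rain = next_word_prob(contexts, bigrams, "might", "rain")    # 2 of 3 cases
p_apple = next_word_prob(contexts, bigrams, "might", "apple")  # never seen
print(p_rain, p_apple)
```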

Word Embeddings and Distributional Semantics

As we delved deeper into NLP, we encountered the fascinating realm of word embeddings and distributional semantics. Word embedding is a technique that transforms words into continuous, dense vector representations, often in high-dimensional spaces. These vectors capture semantic similarities between words, allowing machines to understand their meaning and context.

Word embedding models such as Word2Vec and GloVe gained popularity as they consistently outperformed traditional Distributional Semantic Models (DSMs). Word2Vec, one of the most widely used word embedding algorithms, trains a neural network either to predict a target word from its surrounding context (continuous bag of words, CBOW) or to predict the surrounding words from a target word (skip-gram), in both cases within a window of words.

GloVe (Global Vectors for Word Representation) combines the advantages of count-based and predictive approaches. It constructs a co-occurrence matrix to capture the word-context relationship and then applies matrix factorization techniques to obtain word embeddings.
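The co-occurrence counts that GloVe starts from are easy to sketch. The example below builds raw count vectors over a made-up corpus and compares words with cosine similarity. It deliberately stops before any factorization or training, so it illustrates only the count-based starting point, not GloVe itself; the function names and the window size are assumptions.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """Map each word to counts of the words seen within `window` positions."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[key] * v[key] for key in u if key in v)
    norm = (math.sqrt(sum(x * x for x in u.values()))
            * math.sqrt(sum(x * x for x in v.values())))
    return dot / norm if norm else 0.0


# Hypothetical toy corpus.
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the dog",
    "stocks fell on monday",
]
vectors = cooccurrence_vectors(corpus)

print(cosine(vectors["cat"], vectors["dog"]))     # high: shared contexts
print(cosine(vectors["cat"], vectors["stocks"]))  # 0.0: no shared contexts
```

Words that appear in similar contexts end up with similar vectors, which is the distributional idea that dense embeddings compress into far fewer dimensions.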

Sequence-to-Sequence Models

In recent years, we’ve witnessed a revolutionary breakthrough in NLP with the development of Sequence-to-Sequence (Seq2Seq) models. These models have transformed various NLP tasks by enabling the conversion of sequences from one domain to another, offering solutions to machine translation, text summarization, speech recognition, and more.

At its core, a Seq2Seq model consists of two main components: an encoder and a decoder. The encoder takes the input sequence and compresses it into a fixed-size vector, often called the context vector or the thought vector. The decoder then generates the output sequence by predicting one token at a time, using the context vector as its initial state.

The introduction of the attention mechanism is one of the most significant advancements in Seq2Seq models. Rather than relying on a single fixed-size context vector, attention lets the decoder look back at every encoder state at each step. This captures the contextual relationship between the input and output sequences and improves the quality of the generated translations or summaries.

We’ve seen Seq2Seq models find widespread application across various NLP tasks, showcasing their versatility and effectiveness. Architectures like the Transformer have emerged as state-of-the-art solutions for machine translation tasks, leveraging self-attention mechanisms to capture long-range dependencies.

The Transformer Revolution

With the advent of the transformer architecture, we’ve witnessed a remarkable transformation in Natural Language Processing (NLP). This revolutionary approach has fundamentally changed how we process and understand language, leading to significant advancements in various NLP tasks.

Attention Mechanisms

At the heart of the Transformer revolution lies the attention mechanism. This innovative approach allows models to focus on specific input parts when performing a task, dynamically assigning weights to different elements based on relevance. By incorporating attention, models can effectively capture dependencies and relationships within the data, particularly in tasks involving sequential or structured information.
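The weighting step can be sketched as scaled dot-product attention, the form used in Transformer models. The pure-Python version below is written for readability rather than speed, and the two-dimensional queries, keys, and values are made-up numbers: each query is compared with every key, the scores are turned into weights that sum to one, and the output is the weighted average of the values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # relevance of each input position
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical example: one query that resembles the first key.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
outputs, weights = attention([[5.0, 0.0]], keys, values)
print(weights[0])  # most of the weight falls on the first position
print(outputs[0])  # so the output is close to the first value
```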

The attention mechanism has proven particularly valuable in machine translation. It enables models to focus on different parts of the source sentence when generating each word in the target sentence, significantly improving translation quality. This capability has also enhanced performance in other NLP tasks such as sentiment analysis, question answering, and named entity recognition.

BERT and Bidirectional Context

Building upon the attention mechanism, BERT (Bidirectional Encoder Representations from Transformers) emerged as a game-changer in NLP. Introduced by Google AI in 2018, BERT revolutionized language understanding with its ability to process text bi-directionally. This bidirectional approach allows BERT to capture relationships between words with unprecedented precision, overcoming the limitations of traditional sequential processing.

BERT’s training process involves two phases:

- Pretraining: BERT is trained on a massive corpus of text, learning to predict missing words in sentences. This forces the model to develop a deep semantic understanding of language.

- Fine-tuning: BERT is adapted to specific NLP tasks after pretraining, allowing it to apply its pre-trained knowledge to various applications.
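The "predict missing words" objective from the pretraining phase can be sketched as a data-preparation step: hide some tokens and keep the originals as prediction targets. The helper below is a simplification. It always substitutes `[MASK]`, whereas BERT's actual recipe also sometimes swaps in a random word or leaves the token unchanged; the function name, the whitespace tokenization, and the seed are assumptions for illustration.

```python
import random


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens; return masked input and the targets."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    masked, labels = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append("[MASK]")
            labels[position] = token  # what the model must predict here
        else:
            masked.append(token)
    return masked, labels


tokens = "the bank raised interest rates after the river bank flooded".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3)
print(masked)
print(labels)
```

Because the model sees the words on both sides of each hidden position, filling the gap forces it to use context from both directions.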

This approach has significantly improved tasks such as question answering, sentiment analysis, and named entity recognition. BERT’s contextual word embeddings enable it to understand word polysemy and perform word sense disambiguation effectively.

GPT Models and Few-Shot Learning

While BERT excels in understanding context, GPT (Generative Pre-trained Transformer) models have pushed the boundaries of text generation and few-shot learning. GPT models, particularly GPT-3 with its 175 billion parameters, have demonstrated remarkable task-agnostic, few-shot performance capabilities.

GPT-3 has shown strong performance on many NLP datasets, including translation, question-answering, and cloze tasks, without any gradient updates or fine-tuning. This ability to perform new language tasks from just a few examples or simple instructions marks a significant step towards more human-like language processing.

The few-shot learning capabilities of large language models like GPT-3 have made deep learning accessible to a broader audience. Environmental professionals and the general public can now achieve new machine-learning results using plain-text instructions without requiring formal skills in traditional machine learning or data science.

For instance, in a sentiment analysis task on tweets related to climate change communication, ChatGPT (based on GPT-3.5) achieved 90% accuracy with just three example prompts, demonstrating the power of few-shot learning in real-world applications.
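A few-shot prompt of this kind is simply text: an instruction, a handful of labelled examples, and the new input left for the model to complete. The sketch below assembles such a prompt. The function name, the wording of the instruction, and the three example tweets are invented for illustration, and no model is called.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, labelled examples, and an unlabelled query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Tweet: {text}", f"Sentiment: {label}", ""]
    lines += [f"Tweet: {query}", "Sentiment:"]
    return "\n".join(lines)


# Hypothetical examples; a real study would draw these from its own data.
examples = [
    ("Solar capacity doubled this year, great news!", "positive"),
    ("Another record heatwave and still no action.", "negative"),
    ("The climate summit starts on Monday.", "neutral"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each tweet as positive, negative, or neutral.",
    examples,
    "Wind farms now power our whole town.",
)
print(prompt)
```

The resulting string would be sent to a language model, which is expected to continue the pattern by supplying the missing label.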

The Transformer revolution, encompassing attention mechanisms, BERT’s bidirectional understanding, and GPT’s few-shot learning capabilities, has reshaped the landscape of NLP. These advancements have improved the performance of various language tasks and democratized access to sophisticated AI technologies, opening up new possibilities for researchers, professionals, and enthusiasts across diverse fields.

The journey of artificial intelligence from Turing’s theoretical foundations to today’s advanced language models has transformed our understanding of machine intelligence. This evolution has impacted nearly every aspect of our lives, revolutionizing industries and challenging our perception of intelligence. The advancements in AI, from early problem-solving systems to sophisticated neural networks and transformer models, have opened up new possibilities for solving complex problems and processing natural language.